Costs, Epilogue: Attacks on Water Plants? (Yawn)

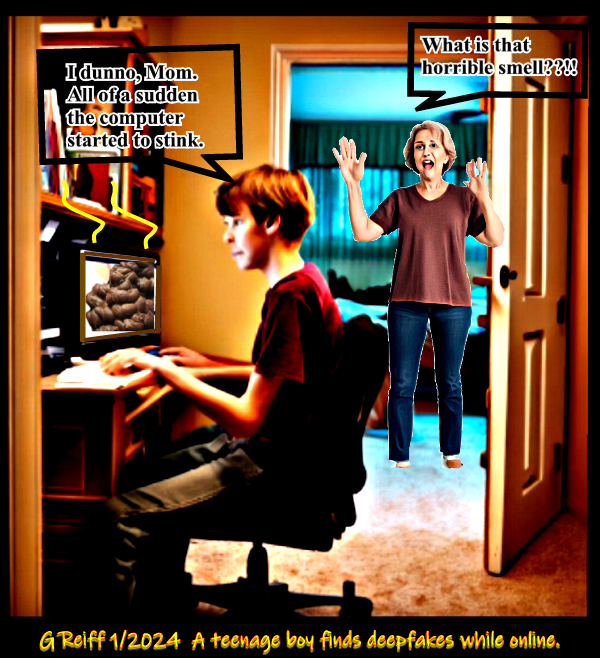

DeepFake

Porn Pics of Taylor Swift? Now That's Entertainment! Ah Jeez...

I am not a fan of Taylor Swift. To the best of my knowledge, I have never heard a song by Taylor Swift. With that bit of Truth In Advertising out of the way, I maintain that nothing illustrates the rot that permeates the Internet today better than the deepfake porn pics of Taylor Swift. For as I posted on X (formerly Twitter), the Internet is now so diseased and decrepit that it reeks of death, making the Internet appear to be dead.

About this imbroglio, there is much that needs to be said. The deepfakes were posted on X (formerly Twitter) but have since been removed from the platform. According to Bing:

The deepfake images of Taylor Swift were posted on X (formerly Twitter) on the night of Wednesday, January 25. The post featuring these photos was viewed 47 million times before the account was eventually suspended the following day. So, the images were on X for approximately one day before they were removed. Please note that this is an approximate duration and the exact time may vary. It's also important to mention that X took action to remove all identified images and took appropriate actions against the accounts responsible for posting them.

That X removed the offensive images and their poster only after the images had been viewed 47 million times over an almost 24-hour period is, indeed, very weak sauce, as they say these days. When I posted on X about the cyberattack on Keenan & Associates on January 21, 2024, I first included a screenshot of a law firm's solicitation for possible victims of the Keenan & Associates hack who might wish to sue for damages in a class action lawsuit. I had not even finished writing the post after uploading the image when the post itself was deleted. It was as if some unseen person hit the backspace key and removed my text in real time. After I rewrote the post without the offending image, it went up on X. So it is inconceivable to me that X did not have a hand in, or at least a tacit complicity in, the publication and distribution of these deepfakes.

It has been widely reported, as Ars Technica did on January 26, 2024, that the deepfakes were created using Microsoft Designer, the generative AI text-to-image technology. Notwithstanding any protestations by Microsoft management that the tech giant could not have prevented the generation of these images beforehand, this, too, smacks of gross hypocrisy.

As I have often said, I use Microsoft's Designer technology to create the original artwork seen on my webpages. For my article "Riddle Me This: When Is a Lie Not a Lie? When the Lie Is a Conspiracy Theory, Like the Dead Internet Theory," posted January 14, 2024, I used Microsoft Cocreator in Paint to generate the Grim Reaper image shown here on the left. Cocreator in Paint is the same technology as MS Designer. In my prompt to generate the black and white image, I used the phrase "film noir" to describe the type of black and white image I sought, and Microsoft's content filters refused to generate it. I could not understand how my original text prompt had violated any of Microsoft's image-generation guidelines. Once I removed the phrase "film noir," the image to the left was generated.

Various reports maintained that Designer was the application that generated the deepfakes. On NBC Nightly News, as was also reported on January 26, 2024, on the NBC News website, Microsoft CEO Satya Nadella implied that the company's technology was not involved. Later, however, Microsoft released a statement that read as follows:

We take these reports very seriously and are committed to providing a safe experience for everyone. We have investigated these reports and have not been able to reproduce the explicit images in these reports. Our content safety filters for explicit content were running and we have found no evidence that they were bypassed so far. Out of an abundance of caution, we have taken steps to strengthen our text filtering prompts and address the misuse of our services.

It only follows that if Microsoft's content filters could prevent me from generating an image using the phrase "film noir," then the same filters could have prevented the tech giant's generative AI from producing the offending images of Taylor Swift. If the reports that MS Designer was used to create the deepfakes are true, then those filters failed in this instance. It seems that only after an incident has occurred, and the stuff hits the fan, do these companies take any affirmative remedial action. That was the case in the Keenan & Associates breach, as it is in so many other incidents involving technology. If stronger measures can be taken to prevent a future incident, then why were those same measures not taken before this incident occurred?

The incident is also a clear illustration of what I call "The Bro Culture," which infects so many users of our new technological tools. As PageSix reported on January 25, 2024, quoting from an X post made the same day the deepfakes went on to be seen 47 million times, the culprit posted, "Bro what have I done… They might pass new laws because of my Taylor Swift post. If Netflix did a documentary about AI pics they’d put me in it as a villain. It’s never been so over."

It's a really simple concept to grasp here, Bro. You are the villain in this arguable crime. But the episode also speaks volumes about how the Internet itself has rotted from the inside out. If the Internet seems dead, it is partially due to too many infantile juveniles, no matter their age, who are freely allowed to commit such crimes (and offenses to the sensibilities of any sane person) but who are rarely brought to justice for their offensive criminal actions. Our online platforms share in the guilt for having destroyed the most wonderful invention for human communication and interaction that the Internet once was.

There is, however, a simple remedy and cure for what is sickening our Internet. On February 12, 2023, in a post titled "Stop the Whining. Stop the Finger Pointing. Hey Congress, Help Solve the Problems: Repeal Section 230 of the 1996 Communications Decency Act," I wrote that it is high time for Congress to repeal Section 230 of the 1996 Communications Decency Act. Section 230 was intended to protect this country's nascent tech industry from lawsuits that would hold Internet companies liable for what they, in essence, published online. Fast forward to the present day. These tech giants are no longer fledgling little starlings in need of protection from Uncle Sam. They are media heavyweights in their own right. Their counterparts and competitors in broadcast and print media are most certainly held legally and ethically responsible for the content they broadcast or print. The Taylor Swift episode makes this point convincingly; it is self-evident. It is high time that media-centric Internet companies were held liable for the harms their content causes. If these images had been published by Hustler magazine, let's say, Ms. Swift would have had immediate recourse in the courts.

As I posited in another post on February 24, 2023, the emergence of Artificial Intelligence only reinforces the fact that it is people who need protection from the Titans of Tech, and not the other way around. Maybe this is what Farmer Jay fears so much: Facebook, which paid for Farmer Jay's appearance, being held to account for what is presented on the Facebook website. Of course, I am sure Iran has more effective ways to destroy America than making websites liable for the content that appears on American websites.

Ooh that smell

Can't you smell that smell

Ooh that smell

The smell of death surrounds you

— That Smell, Lynyrd Skynyrd

Gerald Reiff

