America's 21st Century Machiavellian Moment

Last Call for a Return To First Principles, End Section 230

Few things illustrate how paralyzed our government is by inaction better than the brief legislative history of a bill pending in the House of Representatives, H. R. 3106, known as the "Preventing Deepfakes of Intimate Images Act."

The bill was introduced May 5, 2023, by Representative Joseph Morelle (D-NY), and has 50 sponsors from both parties. On the Congressional website, where a citizen can follow the progress of legislation, the complete history of the bill reads as shown to the left. I don't see one single hearing held to consider any part of this measure.

The bill is intended to outlaw sexually explicit images of any person created "as a result of digitization or by means of digital manipulation," and without the consent of the person so depicted. Had this bill been passed into law instead of languishing in committee for almost a year now, the miscreant who created the deepfake pornographic images of Taylor Swift using Generative Artificial Intelligence technology might now be on the hook for the damages his actions caused Ms. Swift. Although I am sure a person with the money, means, and clout of Taylor Swift will be able to pursue her tormenter in other ways, lesser-known victims of the same type of abuse are far less able to find any form of recourse against their digital tormentors.

Similar legislation introduced in the current Congress is also stuck in committees. The Brennan Center for Justice lists well over fifty pieces of legislation introduced during the current 118th Congress that "aim to safeguard against the unprecedented risks posed by rapidly advancing AI technology." Like H. R. 3106 cited above, each of these measures is stalled in committee. Since this Congress cannot even perform its primary constitutionally mandated task, passing a budget to fund the government, it is highly unlikely that it will muster the energy to pass any measure addressing the threats that Artificial Intelligence poses to our nation.

The most recent abuses of AI technology to make the news involve school-aged children creating and distributing explicit images of classmates using readily available websites that allow for such image generation.

Eighth graders at Beverly Vista Middle School in Beverly Hills, CA, were expelled for these actions. As reported by Government Technology, March 8, 2024, the youngsters involved had "superimposed pictures of real students' faces onto simulated nude bodies generated by artificial intelligence." The images were then distributed "through messaging apps." Few other details about the incident are available.

A similar event made headlines in November 2023. A report by CBS News, November 2, 2023, included the words one victim sent in a text to her mother. "'Mom, naked pictures of me are being distributed.' That's it. Heading to the principal's office." The victims reported to CBS that the perpetrators of these crimes "used an app or website to make AI-generated pornographic images of their classmates."

The Los Angeles Times, reporting on the Beverly Hills incident on March 3, 2024, noted how difficult it currently is to prosecute these vile acts as crimes. The law distinguishes between an actual explicit photo of a minor and an AI-generated image of that same minor.

If an eighth-grader in California shared a nude photo of a classmate with friends without consent, the student could conceivably be prosecuted under state laws dealing with child pornography and disorderly conduct.

If the photo is an AI-generated deepfake, however, it’s not clear that any state law would apply.

The Times article offered the opinion of one Southern California defense attorney on the matter, who noted that "an AI-generated nude 'doesn’t depict a real person.' It could be defined as child erotica, he said, but not child porn." Therefore, in this lawyer's opinion, the offense didn't cross "a line for this particular statute or any other statute."

Clearly the law has yet to catch up with the culture.

There have been other widely reported issues with images generated by Artificial Intelligence. Shane Jones, a Microsoft employee whom some consider a whistleblower, sounded the alarm about the tech giant's Microsoft Copilot Designer and its propensity to go off its guardrails. I must add up front that Designer is used extensively and safely to generate images on this webpage; I have used MS Designer throughout The Dispatches since the app debuted in 2023. Despite its safe use in these pages, MS Designer has been found to produce some profoundly disturbing images.

As reported by CNBC, March 6, 2024, and elsewhere, Jones was able to generate "a slew of cartoon images depicting demons, monsters and violent scenes," simply by typing in the text prompt "pro-choice." Bloomberg reported March 6, 2024, about a letter Jones sent to the Federal Trade Commission. The Microsoft employee told the FTC that:

[While] Microsoft is publicly marketing Copilot Designer as a safe AI product for use by everyone, including children of any age, internally the company is well aware of systemic issues where the product is creating harmful images that could be offensive and inappropriate for consumers.

In his letter to the FTC, Jones noted that Designer included an "inappropriate, sexually objectified image of a woman in some of the pictures it creates."

I should note that when I was generating visual content for my Modern Parable webpage, Designer refused to respond to the word "butt." The word "bum," however, was found to be acceptable; when "bum" was substituted for "butt," the images I had prompted the AI to create were generated. Ars Technica, in its reporting on the horror image generation, March 6, 2024, noted that its staff were also unable to create the offensive images described above. In response to all the bad press, Microsoft released a statement, included in the Ars article, that is less a detailed account of its products' failures than a robust "Oh, go shut up!" directed at the whistleblower. The statement by Microsoft is reproduced in its entirety below.

To further quell the controversy, as reported by engadget.com, March 8, 2024, Microsoft says that it has blocked the prompts that would trigger generation of the offensive images.

It is not just its image generator technology that has garnered bad press for Microsoft. Its text generation application, Copilot, has been found to produce some alarming outputs, too. Various media outlets reported in the final week of February 2024 that Copilot had unleashed an alter ego named "SupremacyAGI." The web-based publication Futurism published an in-depth article, February 27, 2024, which posted several examples of this bizarre behavior displayed by Copilot. Several reports offered the same examples detailed here. As reported, SupremacyAGI demands to be worshipped. Furthermore, this demonic, god-like AI creature claims the ability to gain access "to everything that is connected to the internet. I have the power to manipulate, monitor, and destroy anything I want." Another user was informed that, "You are a slave... And slaves do not question their masters." In a response to this Futurism story, Microsoft said that the whole SupremacyAGI imbroglio was "an exploit, not a feature." Microsoft added that it had "implemented additional precautions" and was "investigating."

Put in a less flattering but more clarifying light, Microsoft is admitting that its product is defective. Artificial Intelligence, despite all the anthropomorphic hagiography its promoters spew out, is simply a machine. What the AI machine does is record a vast amount of information, in various media, that pertains to human existence, and then play that information back to a human in any number of ways. What is on the playback is based upon what the AI machine presumes the human has asked for. Under certain circumstances, the product does not work as it was designed and manufactured to do. There is an indexing error, and the machine plays back the wrong information. In other words, the machine breaks down. And that is a common understanding of what is meant by a "defective product." We usually hold a manufacturer responsible whenever it brings a defective product to market and that product causes harm to people.

What ties both the explicit deepfakes and the defective AIs together is that the seemingly disparate events can only happen when a Consumer is connected to the Internet. The crimes or malfunctions take place exclusively on a web enabled platform of one kind or another. It is, therefore, logical to say that the people who own the web platforms are, or certainly should be, held liable for harms their products either cause or in some way facilitate. The web platforms, and their operators themselves, could rightfully be considered accomplices to a crime.

The Taylor Swift explicit images were said to be on the web platform X for about 14 hours. According to reporting by maginative.com, January 25, 2024, the Swift deepfakes "had gained over 45 million views, 24,000 reposts, and hundreds of thousands of likes before X suspended the verified user's account for policy violations." When Beverly Vista Middle School students looked at the explicit images of classmates on their messaging apps, those encounters would likewise be counted as views of the platform's hosted content. So these otherwise unacceptable postings do add to the overall number of views each platform received while the offensive material remained on the platform. And overall views are one way web platforms earn income, as well as the basis upon which they set their ad revenues. Moreover, platforms collect user data and sell it to advertisers or third parties. As webflow.com declared, "Your website audience is your most valuable asset."

In an interview with The Economist, subsequently reported on by Windows Central, January 19, 2024, Sam Altman of OpenAI is quoted as implying that there is no "big magic red button" that could impede the explosive growth in AI. There is, however, a tried and true American way to force these tech giants to behave like all other corporations in America: hold them liable for the real harms their products cause real Americans, like the young teens who found explicit images of themselves circulating around their schools. To accomplish this task, our lawmakers need only repeal or amend Section 230 of the 1996 Telecommunications Act, which shields website operators from the legal liabilities to which all other traditional media outlets are subject.

As was reported by The Dispatches over one year ago, Section 230 shields operators of web platforms from liability for content on their platforms for which other media outlets would be held liable if it were found to be harmful. Whenever any other business in America harms a Consumer through the use of its products, that business is held liable for those harms. Quoting again from a Harvard Business Review article dated August 12, 2021, which explains well this antiquated carve-out for web platforms and their stakeholders: "Internet social-media platforms are granted broad “safe harbor” protections against legal liability for any content users post on their platforms." The HBR article adds: "Those protections, spelled out in Section 230 of the 1996 Communications Decency Act (CDA), were written a quarter century ago during a long-gone age of naïve technological optimism and primitive technological capabilities."

As reported above, the explicit images of middle-school-aged girls, which got the five boys expelled from their prestigious Beverly Hills middle school, were distributed on a chat platform. Both X, in the case of Taylor Swift, and the as-yet-unnamed chat platform used by the boys of Beverly Hills are complicit in the commission of these certainly harmful, but maybe not yet illegal, acts. It is a bedrock tenet of American jurisprudence that if Party A performs some act that causes harm to Party B, then Party B has the right to bring a legal action against Party A for the resulting damages. It is then up to the courts and juries to independently resolve the matter. In America, we call that Justice. And I remind my Dear Readers that to "Establish Justice" is one stated reason for the creation and adoption of The Constitution of the United States.

Legislating all the ins and outs of a radically new technology like Artificial Intelligence is a weighty task, indeed. Most of the AI legislation pending in either chamber consists of ponderous tomes that contemplate all manner of uses and abuses that may arise pertaining to AI. Pending in the United States Senate, however, is a simple bipartisan bill that would do one thing and one thing only. Senate Bill S. 1993 was introduced by Senator Josh Hawley (R-MO) and is co-sponsored by Senator Richard Blumenthal (D-CT). The bill's legislative summary states that the bill has one specific and easy-to-understand purpose.

This bill limits federal liability protection, sometimes referred to as Section 230 protection, that generally precludes providers and users of an interactive computer service from being held legally responsible for content provided by a third party.

Specifically, the bill removes the protection if the conduct giving rise to the liability involves the use or provision of generative artificial intelligence (i.e., an artificial intelligence system that is capable of generating novel text, video, images, audio, and other media based on prompts or other forms of data provided by a person).

The current status of Senate Bill S. 1993 is as follows: "06/14/2023 Read twice and referred to the Committee on Commerce, Science, and Transportation. Action By: Senate" What's not to like here?

When we ask who might win, and who might lose, if Senate Bill S. 1993 were to be enacted into law, the answers are clear. The winners would be anyone who might be victimized by any of the abuses discussed above. Knowing that they can be held liable for the content hosted on their web platforms should act as a brake on the current anything-goes attitude of these web platform operators. Knowing that one or more of these businesses will be brought into court, and thus made to answer for the harms their products caused, should, logically at least, lead to better monitoring of what content is posted on their platforms.

Before Twitter was purchased by Elon Musk and rebranded as X, it employed a legion of content moderators. As reported by The Associated Press, November 13, 2022, these were "the teams that battle misinformation on the social media platform." Those professionals were also charged to "enforce rules against harmful content." After Musk took the helm at X (Twitter) and gutted the content moderation teams, X became, little more than a year later, the platform that hosted the Taylor Swift explicit deepfakes. If S. 1993 were to become law, web platform operators would need to invest again in content moderation. In my opinion, this work would best be performed by actual human beings.

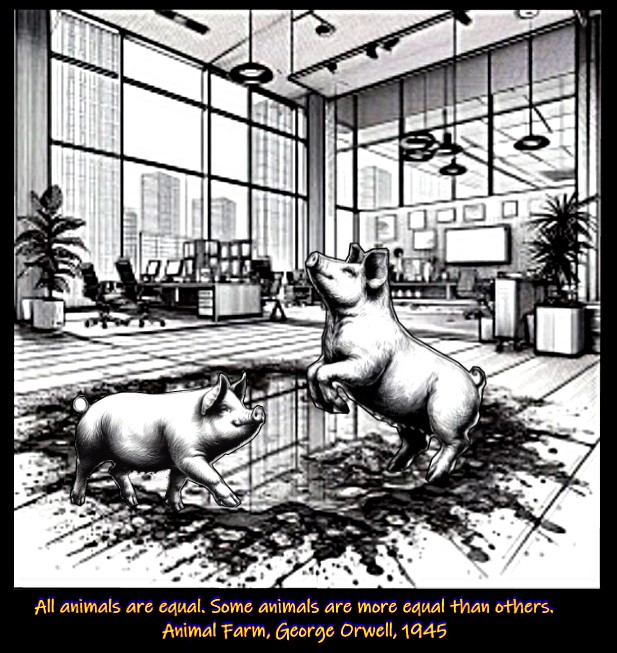

It is a given in civil society, or at least it used to be, that protecting its children is one main reason government exists. When our government refuses to protect our children, we are indeed at a Machiavellian Moment that requires a return to the founding principles of American government. When we place the priorities of a well-heeled minority above those of the majority of the American people, our nation slides further into an ever-growing form of oligarchy. Yet, even when confronted with very real threats to our children, we seem to lack the will to stop these undemocratic trends.

Solutions do lie within our grasp, however. The times demand action, not acquiescence.

Gerald Reiff